Do you Trust your Data?

Question 1: Would you buy a $100,000 car if you couldn’t trust the quality of the petrol you fill it up with?

Question 2: Would you implement a high-end marketing automation tool if you couldn’t trust the data you put into it?

While you have no control over the quality of petrol, you CAN control your data. Increase your data quality and build trust!

Implementing Salesforce or another Marketing Automation solution?

When implementing a marketing automation solution such as ExactTarget, Marketo, Eloqua or HubSpot, the main objective is often to provide each lead/client with a unique interaction process: the customer journey.

1-to-1 communication gives the lead/client the impression of a dialogue that is relevant, conversational and engaging.

But if your marketing automation solution is to replace the attentive sales rep, the data available to the automated workflows needs to be trustworthy.

Trust is something you build

Recently I attended a Salesforce conference where the Managing Director of T-Systems, a global German IT company, was the keynote speaker.

He claimed, in front of a large German crowd, that the T in T-Systems stood for Trust. He got a big, friendly laugh.

But T-Systems is trustworthy. Why?

Because history has shown that they are reliable, they cover a complete range of services and expertise, and their service delivery has been consistent over the years.

Their clients know that, so they return.

Trust is something you build, not something you buy.

You need a blueprint of your data, and you need to track and show how this blueprint improves over time.

What you need are Data Quality Metrics.

Separating Trustworthy Data from Poor Data

In an ideal world your data should be Reliable, Complete and Consistent, but reality often shows that it is not.

On average, only 33% of lead data is targetable, because the combination of segmentation data needed to target it is missing.

Identify your Data Quality Metrics

What to measure varies from business to business, but have you thought about this:

- How well are your records populated on critical segmentation fields: geo-location, job role, functional area, industry and company size?

- Are the values of these fields consistent (standardized and normalized)?

- Completeness of contact data fields like name, address, email, phone etc.

- Completeness of historical behavior like previous product interest, last purchase etc.

- How many of your records are truly mail-able, phone-able, email-able?

- What is the number of potential duplicate records, by owner and by source?

- How old are your records?

- How big a portion of your lead data is actively being used in campaigning, and why?

- What is the volume of new records being added, by source/origin, on a daily/weekly/monthly basis?
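Several of the questions above reduce to simple ratios and counts. The following is a minimal sketch, not a definitive implementation: the sample records, the field names (`email`, `phone`, `industry`, `job_role`, `source`) and the helper functions are all hypothetical and would need to be mapped to your own data model.

```python
from collections import Counter

# Hypothetical sample leads; the field names are illustrative assumptions.
LEADS = [
    {"email": "ann@example.com", "phone": "+45 12345678",
     "industry": "Retail", "job_role": "CMO", "source": "web"},
    {"email": "bob@example.com", "phone": "",
     "industry": "Retail", "job_role": "", "source": "newsletter"},
    {"email": "", "phone": "+33 1 23 45 67 89",
     "industry": None, "job_role": "Sales Manager", "source": "web"},
    {"email": "dan@example.com", "phone": None,
     "industry": "Banking", "job_role": "CFO", "source": "client"},
]


def is_filled(value):
    """A field counts as populated if it is not None and not blank."""
    return value is not None and str(value).strip() != ""


def fill_rate(records, field):
    """Share of records (0.0 to 1.0) in which `field` is populated."""
    if not records:
        return 0.0
    return sum(is_filled(r.get(field)) for r in records) / len(records)


def fully_segmented_rate(records, fields):
    """Share of records in which ALL the given fields are populated."""
    if not records:
        return 0.0
    complete = sum(all(is_filled(r.get(f)) for f in fields) for r in records)
    return complete / len(records)


def records_by_source(records):
    """Number of records per source/origin."""
    return Counter(r.get("source") or "unknown" for r in records)


if __name__ == "__main__":
    for field in ("email", "phone", "industry", "job_role"):
        print(field, fill_rate(LEADS, field))
    print("fully segmented:", fully_segmented_rate(LEADS, ("industry", "job_role")))
    print(records_by_source(LEADS))
```

Note that each segmentation field can look reasonably well populated on its own (three of four records here) while only half of the records have both fields filled. It is the combined figure that tells you how much of the data can actually be targeted.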

In other words: you can express poor data in various ways, but it all comes down to whether the data suits and supports the processes that ‘consume’ it.

Think of it as a way to label your data records, describing what each record can be used for (its target group) and what it is missing to become a target.

Data, Records and Fields serve a purpose

Data completeness is a key to choosing the right records.

But your data isn’t complete, and not all sources provide reliable data. That is normal.

And I’m sure you have only limited resources to improve the data quality, and thus the effectiveness, of your campaigns.

So what should you do?

Go back to basics; what is the purpose of the data?

Often, when data is migrated from various systems into one common database, the data was used for a different purpose in each of the original data sources.

Now that the data is being consolidated, it no longer serves one purpose only, but many purposes at a time. Identify the purposes of your data and measure to what extent each record and field fulfils each purpose.

You can actually put in place a metric/score to calculate this.

This score will help you understand the value of your data, and where to invest your data cleaning efforts.
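As an illustration of such a score, the sketch below rates each record against each purpose by the share of required fields that are populated. The purposes, the required fields per purpose and the function names are assumptions made for this example; a real score might weight fields differently or also check validity, not just presence.

```python
# Hypothetical purposes and the fields each one requires (assumptions).
PURPOSES = {
    "email_campaign": ("email", "first_name"),
    "direct_mail": ("first_name", "last_name", "street",
                    "postcode", "city", "country"),
    "telemarketing": ("first_name", "phone"),
}


def _filled(record, field):
    """True if the field holds a non-blank value."""
    return bool(str(record.get(field) or "").strip())


def purpose_scores(record):
    """Per purpose, the share (0.0 to 1.0) of required fields populated."""
    return {
        purpose: sum(_filled(record, f) for f in fields) / len(fields)
        for purpose, fields in PURPOSES.items()
    }


def usable_for(record):
    """Purposes for which the record has every required field."""
    return [p for p, score in purpose_scores(record).items() if score == 1.0]


def missing_fields(record, purpose):
    """Fields the record still lacks before it can serve `purpose`."""
    return [f for f in PURPOSES[purpose] if not _filled(record, f)]


if __name__ == "__main__":
    lead = {"email": "ann@example.com", "first_name": "Ann", "phone": ""}
    print(purpose_scores(lead))
    print(usable_for(lead))
    print(missing_fields(lead, "telemarketing"))
```

Averaging these scores per source or per owner gives a rough indication of where a cleaning effort would pay off first, and `missing_fields` tells you what that effort would need to fill in.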

Categorize your data based on what it can be used for

Assume that you bring together

1) an (e)mailing list from a newsletter which you send out regularly,

2) a list of clients who have made purchases with you in the last 3 years, and

3) a list of people who have registered themselves on your website (for a download, a pricing enquiry, or a webinar).

The challenge here is that your (e)mail list contains only the email address, and not much detail about the person.

Your web leads will most likely contain incomplete or inaccurate data, since users are often hesitant to give away too much information on a website.

Only your client data may be complete. But since you mainly used the client data for invoicing purposes, you may have the address of the HQ, but not the address of the actual location where the person works.

You should prepare to match and merge the duplicate entries in order to link the data from the different sources together. But first you need to consider standardizing and harmonizing the data to improve the matching rate.
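To illustrate why standardizing first improves the matching rate, here is a minimal sketch that normalizes the email address and uses it as the match key across the three lists. Matching on email alone is a simplifying assumption; real matching usually combines several fields and fuzzy comparison. The function names and sample data are hypothetical.

```python
def normalize_email(value):
    """Standardize an email address so trivial variants match."""
    return (value or "").strip().lower()


def merge_by_email(*sources):
    """Link records from several (source_name, records) pairs by email.

    Returns a dict keyed by normalized email. For each other field, the
    first non-empty value encountered wins. Records without an email are
    skipped here and would need a different match key.
    """
    merged = {}
    for source_name, records in sources:
        for rec in records:
            key = normalize_email(rec.get("email"))
            if not key:
                continue
            entry = merged.setdefault(key, {"email": key, "sources": set()})
            entry["sources"].add(source_name)
            for field, value in rec.items():
                if field != "email" and value and not entry.get(field):
                    entry[field] = value
    return merged


if __name__ == "__main__":
    newsletter = [{"email": " Ann@Example.com "}]
    clients = [{"email": "ann@example.com", "company": "Acme"}]
    web = [{"email": "ANN@EXAMPLE.COM", "company": "acme inc", "phone": "123"}]
    result = merge_by_email(("newsletter", newsletter),
                            ("clients", clients),
                            ("web", web))
    print(result)
```

Without the normalization step, the three spellings of the same address in the usage example would be treated as three different people.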

Once you have identified the target groups, you can nurture each target group (1-7) individually, making sure you spend your effort on the records that represent the most business value (up-sell/cross-sell/multi-touch leads).
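The numbering 1-7 is not explained further in the text. One plausible reading, and it is only an assumption, is that the groups are the seven possible non-empty combinations of the three sources (newsletter only, clients only, web only, the three pairs, and all three). Under that assumption, a sketch of the grouping could look like this; the source names and function names are hypothetical.

```python
from itertools import combinations

# Hypothetical names for the three lists described above.
SOURCES = ("newsletter", "clients", "web")


def all_groups(sources=SOURCES):
    """Every non-empty combination of sources: 2**3 - 1 = 7 for three."""
    groups = []
    for size in range(1, len(sources) + 1):
        for combo in combinations(sources, size):
            groups.append(frozenset(combo))
    return groups


def group_records(memberships):
    """Group record keys by the exact set of sources they appear in.

    `memberships` maps a record key (e.g. an email address) to the set
    of source names in which that record was found.
    """
    grouped = {group: [] for group in all_groups()}
    for key, found_in in memberships.items():
        grouped.setdefault(frozenset(found_in), []).append(key)
    return grouped


if __name__ == "__main__":
    memberships = {
        "ann@example.com": {"newsletter", "clients"},
        "bob@example.com": {"web"},
    }
    for group, keys in group_records(memberships).items():
        print(sorted(group), keys)
```

A record found in more than one source, such as a client who also reads the newsletter, is a natural candidate for the up-sell, cross-sell and multi-touch treatment mentioned above.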

Get Rid of Garbage

What you may realize now, is that a large part of your data isn’t very useful or valuable. Be honest, it looks like garbage.

Garbage in – Garbage out

It is important to identify this part of your dataset and either scrap it or put it aside.

You may be wasting a lot of time fixing these records, delaying the availability of business-critical records.

Leave this for when you have more time, or wait until you have automated processes in place to enrich or clean your data. Some of your “bad” data may get better thanks to the algorithms, and that is just great. But if you try to develop algorithms for all the exceptions, you will be wasting a lot of time.

And if you include this “bad” data in your campaigns, you will not only lower the response rate of those campaigns. You also risk including leads which are definitely not a target, and you risk ruining an existing relationship by, for example, sending promotional sign-up offers to existing clients.

Building Trust through Transparency

To build trust in data you need transparency, and for transparency you need metrics as discussed above. Most importantly, you need a good track history of your metrics.
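A track history can be as simple as a dated snapshot of each metric, taken at regular intervals. The sketch below keeps the snapshots in a list and reports the change between the first and the latest one. The metric name `email_fill_rate`, the in-memory list and the function names are assumptions for illustration; in practice the snapshots would live in a report, a custom object or a database table.

```python
from datetime import date


def record_snapshot(history, day, metrics):
    """Add a dated snapshot of metric values and keep history sorted."""
    history.append({"date": day, **metrics})
    history.sort(key=lambda snapshot: snapshot["date"])


def trend(history, metric):
    """Change in `metric` between the earliest and latest snapshot."""
    if len(history) < 2:
        return 0.0
    return history[-1][metric] - history[0][metric]


if __name__ == "__main__":
    history = []
    record_snapshot(history, date(2024, 1, 1), {"email_fill_rate": 0.50})
    record_snapshot(history, date(2024, 2, 1), {"email_fill_rate": 0.60})
    print(trend(history, "email_fill_rate"))
```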

Once you have your metrics identified and you start collecting them through dashboards or other reports: share them with your users!

“It is not a shame to show that the situation isn’t perfect; it is a shame not to show what you do about it.”

Like Salesforce, which openly shows the system status of all its servers (http://www.trust.salesforce.com/), apply the concept of building trust through transparency.

Think of the Salesforce servers as representing different subsets of your data. Run regular scans of your data to reclassify your records.

You can now show progress over time. If you want to go deeper and offer drill-downs, run a consistency check for each subset on critical fields like country, state, postcode, city, phone, email and website, and do not forget the pick-list fields.

(Salesforce allows pick-list fields to be populated with values that are not in the available list of values when data is imported through the API or with other types of integration tools.)
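A consistency check on a pick-list field can be sketched as a comparison of the stored values against the list of allowed values. The allowed values, the `industry` field and the function name below are hypothetical; the records are assumed to have been exported already, for example as rows from a report.

```python
from collections import Counter

# Hypothetical pick-list definition for an "industry" field (assumption).
ALLOWED_INDUSTRIES = {"Banking", "Retail", "Manufacturing"}


def invalid_picklist_values(records, field, allowed):
    """Count the stored values of `field` that are not in `allowed`.

    Empty values are ignored here; they are a completeness issue rather
    than a consistency issue. The comparison is case-sensitive, so
    "retail" is reported when only "Retail" is allowed.
    """
    counts = Counter()
    for rec in records:
        value = rec.get(field)
        if value and value not in allowed:
            counts[value] += 1
    return counts


if __name__ == "__main__":
    records = [
        {"industry": "Retail"},
        {"industry": "retail"},
        {"industry": "Banks"},
        {"industry": "retail"},
        {"industry": None},
    ]
    print(invalid_picklist_values(records, "industry", ALLOWED_INDUSTRIES))
```

Sorting the result by frequency shows which non-standard values are worth mapping to a standard one first.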

Salesforce has provided a basic Data Quality Analysis Dashboard which may be a good start or at least a source of inspiration: https://appexchange.salesforce.com/listingDetail?listingId=a0N300000016cshEAA

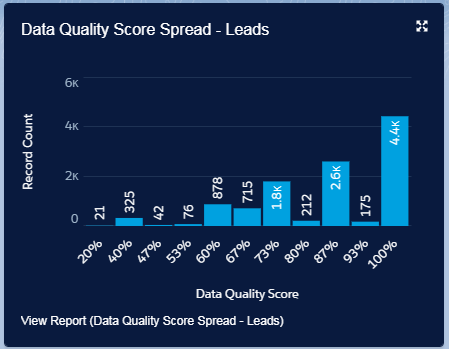

In the DataTrim Data Laundry we are taking this a step further and adding Data Quality Scores for each record, allowing you to dig into the details. See more here: DataTrim Data Laundry: Data Quality Scores, Indicators and Dashboards

Be pragmatic, it will never be perfect.

Consider the quick wins, where you get the most value for the effort you put in. Working with high volumes is always time-consuming and costly, so invest your time in the data which represents the most value/opportunity for you.

Let your analysis and your data quality metrics focus on separating good data from poor data, and based on this you can start identifying and prioritizing the treatments you want to apply to make the poor data good and the good data better.


Contact Us

Please do not hesitate to reach out to us, we are happy to discuss your needs, and see how our solution can address YOUR challenges.

DataTrim helps companies and organizations worldwide in improving and maintaining a high level of Data Quality.

The DataTrim Data Laundry improves the reliability, completeness and consistency of your data by applying a set of data cleaning treatments.

DataTrim’s solutions add experience-based data cleaning processes to lead management, marketing automation, customer support and account management processes in Salesforce, and create valuable impact on day-to-day usage and productivity in a simple-to-use, collaborative and cost-effective way.